HOT

Production-Quality Lenses Directly from SLA 3D P…

Production-Quality Lenses Directly from SLA 3D P… Forging Ahead with Solar Energy and GibbsCAM CNC…

Forging Ahead with Solar Energy and GibbsCAM CNC… GibbsCAM Software Helps Race-Team Mechanics Beco…

GibbsCAM Software Helps Race-Team Mechanics Beco… Metro Aerospace Introduces 3D Printed Part for F…

Metro Aerospace Introduces 3D Printed Part for F… GibbsCAM Enables Rover Exploration Vehicle Parts…

GibbsCAM Enables Rover Exploration Vehicle Parts… Designing a Custom Boot for an Injured Penguin

Designing a Custom Boot for an Injured Penguin Asano Uses Geomagic 3D Scan Software to Drive In…

Asano Uses Geomagic 3D Scan Software to Drive In…

Human-machine interfaces

Yerbolat Khassanov, Nursultan Imanberdiyev and Huseyin Atakan Varol (PI)

Advanced Robotics and Mechatronics Systems Laboratory (www.arms.nu.edu.kz)

Department of Robotics and Mechatronics

Nazarbayev University

Astana, Kazakhstan

Introduction

Application areas of autonomous robots have expanded significantly over the last decade. This happened mainly due to the progress achieved in fields of artificial intelligence and computer vision. However, there is no autonomous system yet which can guarantee a minimum level of reliability for applications of increased complexity. Instead, the shortcoming is surmounted by employing human intelligence for high-level robot control. This configuration on the other hand raises an issue of safety when the human presence at the robot site is undesirable or impossible, e.g. underwater exploration, nuclear/toxic/explosive material handling and disposal, search and rescue in dangerous/hazardous areas, etc. As a result, number of research projects have been carried out which developed various human-machine interfaces with an aim of overcoming the limitation.

The introduced human-machine interfaces for robotic systems include a variety of methods based on electromyographic muscular activity sensors, exoskeletal mechanical devices, joysticks, haptic device based and instrumented gloves. However, these devices mostly assume unnatural hand and body motions failing to utilize the full extent of the dexterous human motion capabilities. Vision-based [1-4] approaches have been developed to provide a higher bandwidth teleoperation interface between the user and the device. With the ability to obtain full-body kinematics, vision-based systems also introduce several shortcomings. Firstly, a high number of cameras is needed to prevent occlusion of the markers required for motion tracking. Secondly, user needs to operate in the capture volume of the cameras. Additionally, vision-based motion capture systems are subject to limited portability due to the fact that the component cameras are fixed to frames or tripods. Thirdly, infrared light sources and reflective objects in the capture volume can add various levels of noise and resulting erroneous decisions. To eliminate the noise, computationally expensive filtering and post-processing procedures are required to be implemented. In order to solve the aforementioned problems and provide a reliable, portable and real-time kinematic data acquisition, in this work authors designed the mobile manipulator teleoperation control system which utilizes inertial motion capture (IMC) suit.

Watch KUKA youBot mobile manipulator and Xsens MVN BIOMECH:

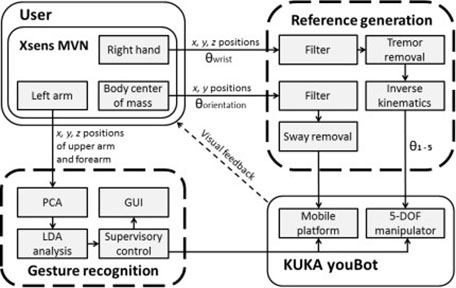

Specifically, the introduced human-robot interface system is comprised with a KUKA youBot mobile manipulator and Xsens MVN BIOMECH inertial motion capture system (IMC). MVN BIOMECH consists of 17 inertial motion trackers attached to the body using straps capable of providing real-time full body kinematic data. The KUKA youBot is an omnidirectional mobile platform with mecanum wheels, which accommodates five degrees of freedom robot manipulator. The robot arm is a series chain of five revolute joints with a removable two-finger gripper as end effector. Both the IMC system and the mobile manipulator communicate with main control computer wirelessly. The overview of the system is shown in Figure 1.

MVN BIOMECH acquires the user kinematic data with 50 Hz sampling rate. In order to create an intuitive mapping between the user and mobile manipulator, we decided to use the right hand position and orientation to generate the robot end effector position and orientation references. Inverse kinematics for the mobile manipulator arm is computed at each sampling time to convert hand position and orientation to robot joint angles. The omnidirectional base’s position and orientation are calculated from the human user’s body center of mass (CoM) position and orientation. The holonomic structure of the robot provides the ability to track any user CoM (as long as the maximum velocity constraints are satisfied). In order to remove movement artifacts due to body sway during walking and standing; and involuntary hand movements; the acquired kinematic data is firstly filtered. Additionally, we implemented simple sway and tremor removal algorithm to clean the motion references. In the involuntary movement removal algorithm, filtered new robot position reference samples are only given as final motion references for the mobile manipulator, if the Euclidian distance between the last and current sample is greater than a predefined distance. We set this distance to be 5 and 1 mm for the robot base and the arm, respectively. This allows us to filter out higher frequency lower amplitude motions provided by involuntary movements, and use only distinct and deliberate motions for motion reference generation.

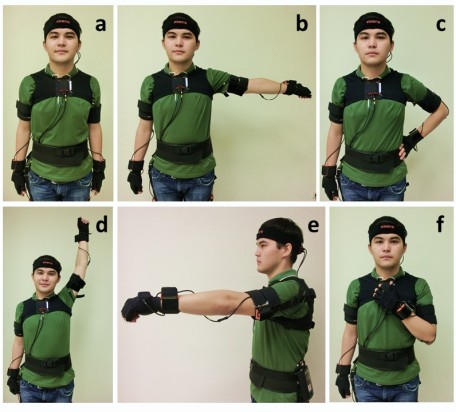

Left hand of the human user was designated to give high-level commands to the robot manipulator. For this, the IMC based left arm gesture recognizer was implemented. Six distinct left arm gestures were selected for providing high level commands to the teleoperation system. These gestures are hand forward, hand on chest, hand on waist, hand up, hand sideways and neutral. To increase the reliability of the gesture recognizer we employed a consensus voting scheme at the expense of increased delay. The algorithm considers ten consecutive classification results and generates the command only if all of the ten labels belong to the same class. Six gestures were associated with particular commands. Some of the gestures were used to toggle states of the system, for example “Manipulator On/Off”. The arm gestures and their corresponding commands are as follows:

• Hand forward gesture command activates/deactivates the mobile platform.

• Hand up gesture activates/deactivates the manipulator; deactivation moves manipulator to the initial position.

• Hand on chest gesture halts the manipulator at last acquired position or resumes it back from that position.

• Hand sideways gesture allows a user to switch between different manipulator motion modes.

• Hand on waist gesture is used to exit a selected mode.

• Neutral gesture does nothing, so that a user can rest his/her left hand.

An ability to visualize the recognized gestures and the status of the robot is provided through a graphical user interface. More detailed information about this project can be found in [5, 6].

Conclusion

In this project we present a novel Inertial Motion Capture based teleoperation system used for control of mobile robot manipulator. The introduced Xsens MVN BIOMECH overcomes most of the drawbacks and constraints of traditional and newly emerging vision-based teleoperation systems. Left hand based IMC gesture recognizer was implemented for high-level control of the robot. The classifier accuracy for the six class gesture recognition problem is 95.6 percent. In addition, graphical user interface was developed to enable user to track the output of gesture recognizer and status of the robot. The experiment comparing implemented system against keyboard interface was conducted, where IMC based interface outperforms the keyboard interface and shows promising results.

References

[1] J. Kofman, X. H. Wu, T. J. Luu, and S. Verma, “Teleoperation of a robot manipulator using a vision-based human-robot interface,” IEEE Transactions on Industrial Electronics, vol. 52, pp. 1206-1219, Oct 2005.

[2] S. Verma, J. Kofman, and X. H. Wu, “Application of markerless image-based arm tracking to robot-manipulator teleoperation,” 1st Canadian Conference on Computer and Robot Vision, Proceedings, pp. 201-208, 2004.

[3] C. Hu, M. Q. Meng, P. X. P. Liu, and X. Wang, “Visual gesture recognition for human-machine interface of robot teleoperation,” in Proceedings of the 2003 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2003, pp. 1560-1565.

[4] W. B. Song, X. J. Guo, F. J. Jiang, S. Yang, G. X. Jiang, and Y. F. Shi, “Teleoperation humanoid robot control system based on Kinect sensor,” in International Conference on Intelligent Human-Machine Systems and Cybernetics, Nanchang, Jiangxi, 2012, pp. 264-267.

[5] Y. Khassanov, N. Imanberdiyev, and H. A. Varol, “Inertial motion capture based reference trajectory generation for a mobile manipulator,” presented at the Proceedings of the 2014 ACM/IEEE international conference on Human-robot interaction, Bielefeld, Germany, 2014.

[6] Y. Khassanov, N. Imanberdiyev, and H. A. Varol, “Real-time gesture recognition for the high-level teleoperation interface of a mobile manipulator,” presented at the Proceedings of the 2014 ACM/IEEE international conference on Human-robot interaction, Bielefeld, Germany, 2014.